IBM announced plans to roll out a Watson-enabled cloud service designed to help media and entertainment companies extract new insights from video with a level of analysis not previously possible.

The service will make sense of unstructured data and help companies make more informed decisions about the content they create, acquire and deliver to viewers. This content enrichment service, expected to be available later this year, will use Watson's cognitive capabilities to provide a deeper analysis of video and extract metadata like keywords, concepts, visual imagery, tone and emotional context. The offering is unique in the market because it applies a range of artificial intelligence capabilities -- including language, concepts, emotions and visual analysis -- to extract insights.

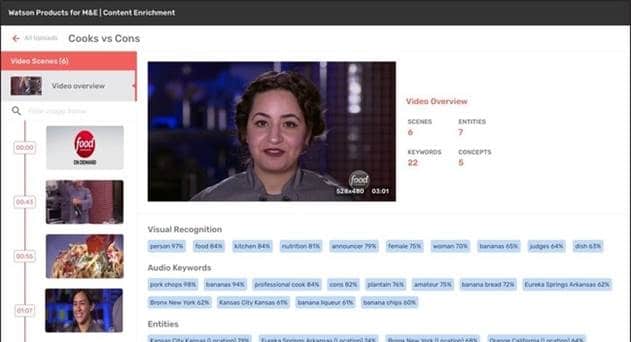

The service will use several Watson APIs, including Tone Analyzer, Personality Insights, Natural Language Understanding and Visual Recognition. In addition, it will use new IBM Research technology to analyze the data generated by Watson and segment videos into logical scenes based on semantic cues in the content. This capability identifies scenes based on a deeper understanding of content and context beyond what's available in current offerings in the market.
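To make the idea of segmenting video by semantic cues concrete, here is a minimal sketch in Python. It is not IBM's method, which the announcement does not describe. It assumes each shot already carries a set of extracted keywords and starts a new scene whenever the keyword overlap (Jaccard similarity) with the previous shot drops below a threshold. The function names, the sample keywords and the `threshold` value are all hypothetical.

```python
from typing import List, Set


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap between two keyword sets, from 0.0 (disjoint) to 1.0 (identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def segment_scenes(shot_keywords: List[Set[str]], threshold: float = 0.2) -> List[List[int]]:
    """Group consecutive shot indices into scenes.

    A new scene starts whenever a shot's keywords overlap with the previous
    shot's keywords by less than `threshold` (a hypothetical tuning value).
    """
    scenes: List[List[int]] = []
    for i, keywords in enumerate(shot_keywords):
        if scenes and jaccard(shot_keywords[i - 1], keywords) >= threshold:
            scenes[-1].append(i)  # similar enough: same scene continues
        else:
            scenes.append([i])    # first shot, or a semantic break: new scene
    return scenes


# Hypothetical keyword sets, standing in for per-shot metadata.
shots = [
    {"basketball", "court", "crowd"},
    {"basketball", "dunk", "crowd"},
    {"family", "dinner", "kitchen"},
    {"family", "dinner", "table"},
]
scenes = segment_scenes(shots)
print(scenes)  # [[0, 1], [2, 3]]
```

A production system would presumably combine many more signals, such as tone, visual content and dialogue, but the principle of cutting where the meaning changes is the same.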

For example, the new offering can enable a sports network to more quickly identify and package specific basketball-related content that contains happy or exciting scenes based on language, sentiment and images, and work with advertisers to promote clips of those scenes to fans prior to the playoffs. Previously, someone would have had to manually go through every piece of video to identify each piece of content and break it into scenes. Now each scene can be identified more quickly to attract viewers and advertisers for quick-turn campaigns. The new service can also be applied to repackaging specific scenes from years of TV shows for an advertiser that wants its brand associated with certain moments -- such as a family eating dinner or driving in a car.
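Once scenes carry metadata, the sports-network scenario reduces to a filter over that metadata. The sketch below illustrates this in Python under stated assumptions: the `Scene` record, its fields, the emotion labels and the `min_score` cutoff are hypothetical and do not reflect the actual output format of the service.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Scene:
    # All fields are hypothetical stand-ins for extracted scene metadata.
    clip_id: str
    keywords: Set[str] = field(default_factory=set)
    emotions: Dict[str, float] = field(default_factory=dict)  # label -> score in [0, 1]


def find_scenes(scenes: List[Scene], topic: str, emotion: str,
                min_score: float = 0.7) -> List[str]:
    """Return clip IDs whose keywords include `topic` and whose score
    for `emotion` is at least `min_score`."""
    return [
        s.clip_id
        for s in scenes
        if topic in s.keywords and s.emotions.get(emotion, 0.0) >= min_score
    ]


# Hypothetical library of already-analyzed scenes.
library = [
    Scene("game7-q4", {"basketball", "dunk"}, {"joy": 0.9}),
    Scene("game3-q1", {"basketball", "timeout"}, {"joy": 0.3}),
    Scene("sitcom-s2e5", {"family", "dinner"}, {"joy": 0.8}),
]
matches = find_scenes(library, topic="basketball", emotion="joy")
print(matches)  # ['game7-q4']
```

The same filter with `topic="dinner"` would serve the advertiser scenario of finding family-dinner moments across years of TV shows.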

In addition, the new service could help media and entertainment companies better manage their content libraries. For example, a company might want to prioritize content that targets viewers who want more uplifting stories about world adventures. To address this need, the new service could help the company analyze its content library in greater detail to determine whether it is meeting this specific interest.

"We are seeing that the dramatic growth in multi-screen content and viewing options is creating a critical need for M&E companies to transform the way content is developed and delivered to address evolving audience behaviors," said Steve Canepa, GM for IBM Global Telecommunications, Media and Entertainment Industry. "Today, we're creating new cognitive solutions to help M&E companies uncover deeper insights, see content differently and enable more informed decisions."